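Hadoop: Single-Node and Cluster Setup

Environment:

OS: CentOS 7

Java: jdk-8u181-linux-x64

Package download directory: /data/

Extraction directory: /opt/

hadoop: hadoop-2.6.5.tar.gz

hive: apache-hive-2.3.4-bin.tar.gz

The server firewall must be stopped and disabled at boot.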

Configure the system Java environment variables:

- Extract the Java archive

tar -zxvf jdk-8u181-linux-x64.tar.gz -C /opt/

- Configure the environment variables

vi /etc/profile

export JAVA_HOME=/opt/jdk1.8.0_181

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

source /etc/profile

Set up Hadoop

- Extract the Hadoop archive

tar -zxvf hadoop-2.6.5.tar.gz -C /opt/

- Configure the Hadoop environment variables

vi /etc/profile

export HADOOP_HOME=/opt/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin

source /etc/profile

- Configure Hadoop

3.1 Configure hadoop-env.sh

vi /opt/hadoop-2.6.5/etc/hadoop/hadoop-env.sh

Find:

# The java implementation to use.
export JAVA_HOME=${JAVA_HOME}

Replace it with:

export JAVA_HOME=/opt/jdk1.8.0_181

Configure yarn-env.sh the same way:

export JAVA_HOME=/opt/jdk1.8.0_181

3.2 Configure core-site.xml. hadoop.tmp.dir is a path under the Hadoop directory; the hdfs:// address in fs.defaultFS is the VM's IP.

vi /opt/hadoop-2.6.5/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop-2.6.5/tmp</value>
    <description>Abase for other temporary directories.</description>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://172.16.161.150:8888</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
</configuration>

3.3 Configure hdfs-site.xml

vi /opt/hadoop-2.6.5/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/opt/hadoop-2.6.5/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/opt/hadoop-2.6.5/tmp/dfs/data</value>
  </property>
</configuration>
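Both site files share the same `<configuration>` / `<property>` / `<name>` / `<value>` layout, so they can be generated instead of typed by hand. The following is a minimal sketch using only the Python standard library; the helper name `build_site_xml` is hypothetical and not part of Hadoop.

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties):
    """Render a dict of Hadoop properties as a <configuration> XML string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root, space="  ")
    return ET.tostring(root, encoding="unicode")


# Same values as the hdfs-site.xml shown in section 3.3.
hdfs_site = build_site_xml({
    "dfs.replication": 1,
    "dfs.namenode.name.dir": "/opt/hadoop-2.6.5/tmp/dfs/name",
    "dfs.datanode.data.dir": "/opt/hadoop-2.6.5/tmp/dfs/data",
})
print(hdfs_site)
```

Writing the returned string to `/opt/hadoop-2.6.5/etc/hadoop/hdfs-site.xml` would reproduce the file edited with `vi` above.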

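- Configure passwordless SSH login

The VM has no .ssh directory at first; ssh-keygen creates it together with the key pair.

ssh-keygen -t rsa

Copy the local id_rsa.pub to the VM:

ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.161.150

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

- Start and stop Hadoop

5.1 Format the NameNode before the first start

cd /opt/hadoop-2.6.5

./bin/hdfs namenode -format

5.2 Start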

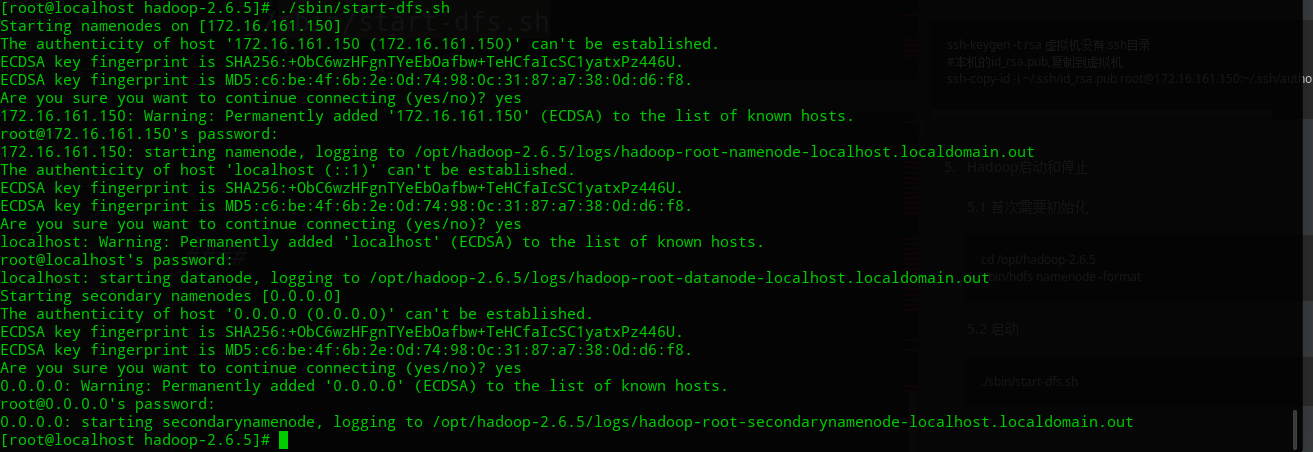

./sbin/start-dfs.sh

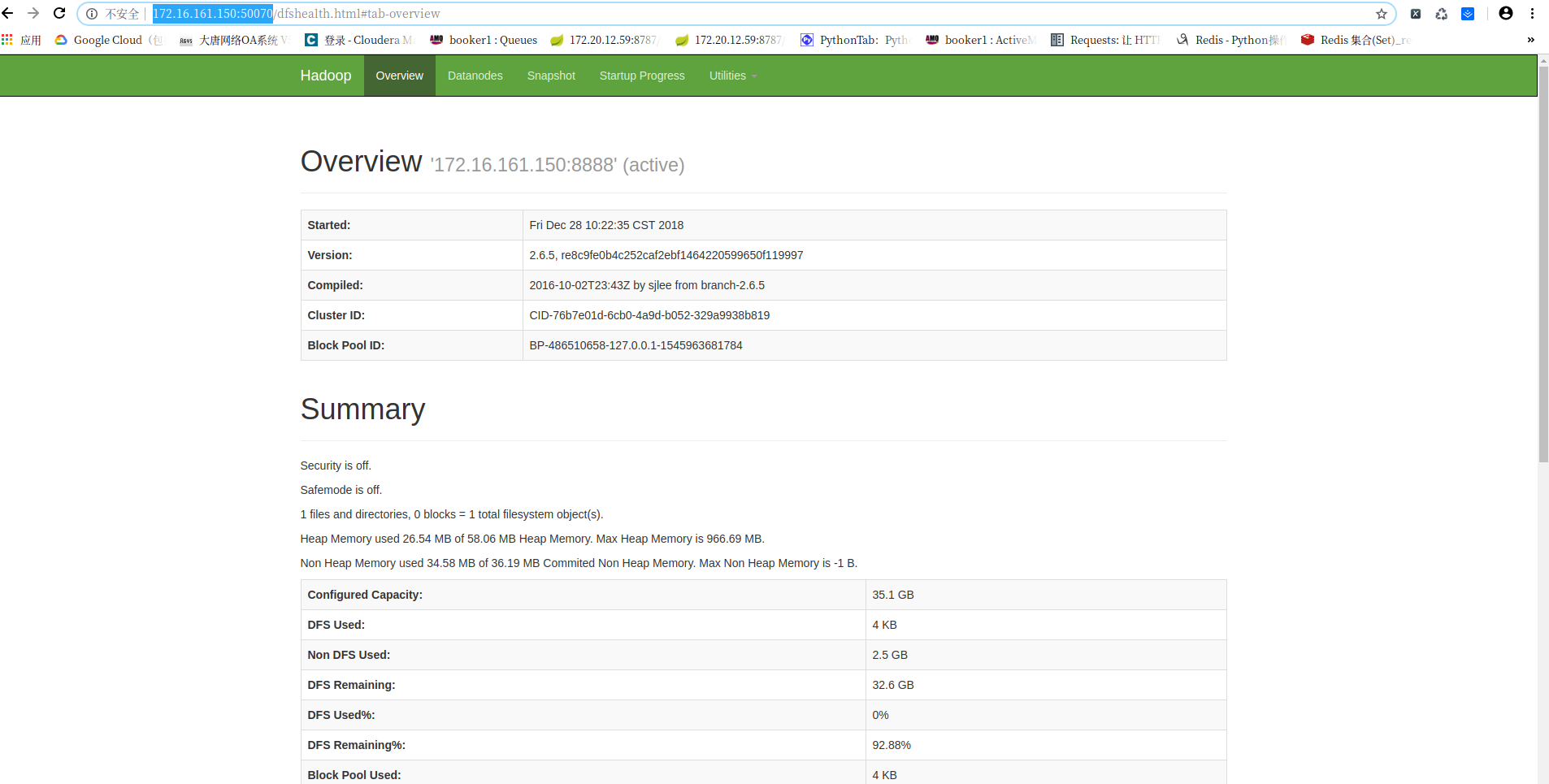

In a browser, open: http://172.16.161.150:50070

On the command line, run: hadoop fs -mkdir /test

If the newly created directory shows up in the browser, HDFS is working.
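The same check can be scripted against the NameNode's WebHDFS REST endpoint, which is served on the web UI port. This is a sketch under the assumption that WebHDFS is enabled (`dfs.webhdfs.enabled`, which defaults to true in Hadoop 2.x); the function names are hypothetical. The demonstration at the bottom runs offline on a sample response shaped like the `LISTSTATUS` JSON.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def liststatus_url(host, path="/", port=50070):
    """Build the WebHDFS LISTSTATUS URL for a path on the NameNode."""
    return "http://{}:{}/webhdfs/v1{}?op=LISTSTATUS".format(host, port, quote(path))


def entry_names(liststatus_json):
    """Extract the entry names from a LISTSTATUS JSON response."""
    statuses = json.loads(liststatus_json)["FileStatuses"]["FileStatus"]
    return [status["pathSuffix"] for status in statuses]


def list_hdfs_dir(host, path="/", port=50070, timeout=5):
    """Query the NameNode over HTTP and return the names found under path."""
    with urlopen(liststatus_url(host, path, port), timeout=timeout) as resp:
        return entry_names(resp.read().decode("utf-8"))


# Offline demonstration; no cluster is contacted here.
sample = '{"FileStatuses": {"FileStatus": [{"pathSuffix": "test", "type": "DIRECTORY"}]}}'
print(liststatus_url("172.16.161.150"))
print(entry_names(sample))
```

Against the running single-node setup, `"test" in list_hdfs_dir("172.16.161.150")` should hold once `hadoop fs -mkdir /test` has succeeded.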

5.3 Stop

./sbin/stop-dfs.sh

- Configure YARN

6.1 Configure mapred-site.xml

cd /opt/hadoop-2.6.5/etc/hadoop/

cp mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

<configuration>
  <!-- Tell the MapReduce framework to run on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

6.2 Configure yarn-site.xml

vi yarn-site.xml

<configuration>
  <!-- Site specific YARN configuration properties -->
  <!-- Reducers fetch map output through the mapreduce_shuffle service -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>

6.3 Start and stop YARN

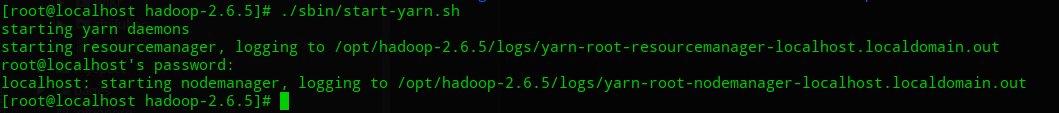

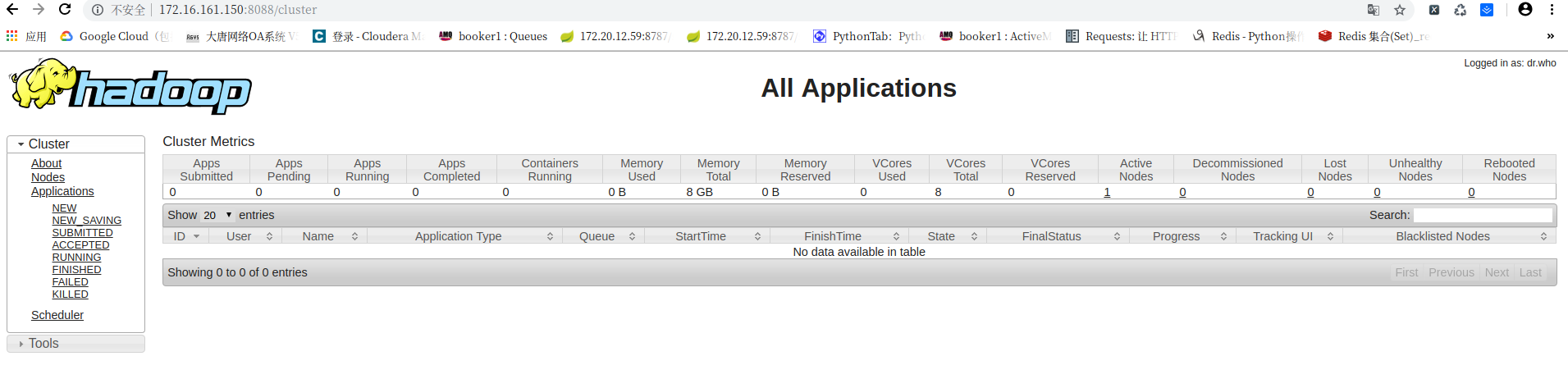

cd /opt/hadoop-2.6.5

./sbin/start-yarn.sh

In a browser, open: http://172.16.161.150:8088

Stop

./sbin/stop-yarn.sh

- Check the running processes

[root@localhost hadoop-2.6.5]# jps

2032 SecondaryNameNode

1896 DataNode

1787 NameNode

2365 ResourceManager

2749 Jps

2638 NodeManager
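Reading the `jps` listing by eye works for one node, but the check is easy to automate. The sketch below parses `jps` output and reports which of the five daemons from the listing above are missing; the function names and the `EXPECTED_DAEMONS` set are illustrative choices, not part of Hadoop.

```python
EXPECTED_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode",
                    "ResourceManager", "NodeManager"}


def running_daemons(jps_output):
    """Return the set of Java process names listed by jps."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # A jps line looks like "<pid> <name>"; skip prompts and blank lines.
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(jps_output, expected=EXPECTED_DAEMONS):
    """Return the expected daemons that jps did not list, sorted by name."""
    return sorted(expected - running_daemons(jps_output))


sample = """2032 SecondaryNameNode
1896 DataNode
1787 NameNode
2365 ResourceManager
2749 Jps
2638 NodeManager"""
print(missing_daemons(sample))  # → []
```

On a live node the input could come from `subprocess.run(["jps"], capture_output=True, text=True).stdout`.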

The single-node Hadoop deployment is complete.

Install Hive

- Extract the Hive archive

tar -zxvf apache-hive-2.3.4-bin.tar.gz -C /opt/

- Configure the Hive environment variables

export HIVE_HOME=/opt/apache-hive-2.3.4-bin

export PATH=$PATH:$HIVE_HOME/bin

- Configure Hive

2.1 Create the HDFS directories

Before creating tables in Hive, create the following HDFS directories and grant them the appropriate permissions.

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir -p /tmp/hive/

hdfs dfs -chmod 777 /user/hive/warehouse

hdfs dfs -chmod 777 /tmp/hive

Check that the directories were created:

$HADOOP_HOME/bin/hadoop fs -ls /user/hive/

Create a local temporary directory for Hive:

mkdir /opt/apache-hive-2.3.4-bin/tmp

chmod 777 /opt/apache-hive-2.3.4-bin/tmp

2.2 hive-site.xml

cd /opt/apache-hive-2.3.4-bin/conf

cp hive-default.xml.template hive-site.xml

vi hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://172.16.161.1:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>yuan121423</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>datanucleus.schema.autoCreateAll</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
  <property>
    <name>hive.server2.authentication</name>
    <value>NOSASL</value>
    <description>
      Expects one of [nosasl, none, ldap, kerberos, pam, custom].
      Client authentication types.
      NONE: no authentication check
      LDAP: LDAP/AD based authentication
      KERBEROS: Kerberos/GSSAPI authentication
      CUSTOM: Custom authentication provider (Use with property hive.server2.custom.authentication.class)
      PAM: Pluggable authentication module
      NOSASL: Raw transport
    </description>
  </property>
</configuration>

In hive-site.xml, replace every occurrence of ${system:java.io.tmpdir} with the Hive temporary directory created above (/opt/apache-hive-2.3.4-bin/tmp).

2.3 hive-env.sh

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

Find these lines:

# Set HADOOP_HOME to point to a specific hadoop install directory
# HADOOP_HOME=${bin}/../../hadoop

# Hive Configuration Directory can be controlled by:
# export HIVE_CONF_DIR=

Change them to:

HADOOP_HOME=/opt/hadoop-2.6.5

export HIVE_CONF_DIR=/opt/apache-hive-2.3.4-bin/conf

2.4 Initialize the metastore schema

schematool -initSchema -dbType mysql

[root@localhost conf]# schematool -initSchema -dbType mysql
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/apache-hive-2.3.4-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Metastore connection URL: jdbc:mysql://172.16.161.1:3306/hive?createDatabaseIfNotExist=true
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: hive
Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.
Starting metastore schema initialization to 2.3.0
Initialization script hive-schema-2.3.0.mysql.sql
Initialization script completed
schemaTool completed
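One detail of hive-site.xml that is easy to get wrong: the `javax.jdo.option.ConnectionURL` value joins its parameters with `&`, and a bare `&` is not legal inside an XML value; it has to be written as `&amp;`. The short sketch below uses the standard library's `xml.sax.saxutils.escape` to produce the escaped form and then parses it back to show that the JDBC driver still sees the plain URL.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

jdbc_url = ("jdbc:mysql://172.16.161.1:3306/hive"
            "?createDatabaseIfNotExist=true&characterEncoding=UTF-8&useSSL=false")

# escape() turns & into &amp; (and < > into &lt; &gt;) so the value is valid XML.
property_xml = (
    "<property>"
    "<name>javax.jdo.option.ConnectionURL</name>"
    "<value>{}</value>"
    "</property>"
).format(escape(jdbc_url))
print(property_xml)

# Parsing the fragment recovers the original URL: the escaping only
# affects how the value is stored in the file.
round_trip = ET.fromstring(property_xml).findtext("value")
print(round_trip == jdbc_url)
```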

- Start

nohup hive --service metastore &

nohup hive --service hiveserver2 &

Installation issues

- Error:

Error: Could not open client transport with JDBC Uri: jdbc:hive2://172.16.161.150:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root (state=08S01,code=0)

Fix: add the following proxy-user properties to core-site.xml:

<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>

ZooKeeper setup

1 Extract

```shell script
tar zxvf apache-zookeeper-3.5.6.tar.gz
```

#### Install HBase

1 Download HBase

**[HBase download page](https://www-us.apache.org/dist/hbase/)**

> I run Hadoop 2.6.5, so I downloaded HBase 1.3.6.

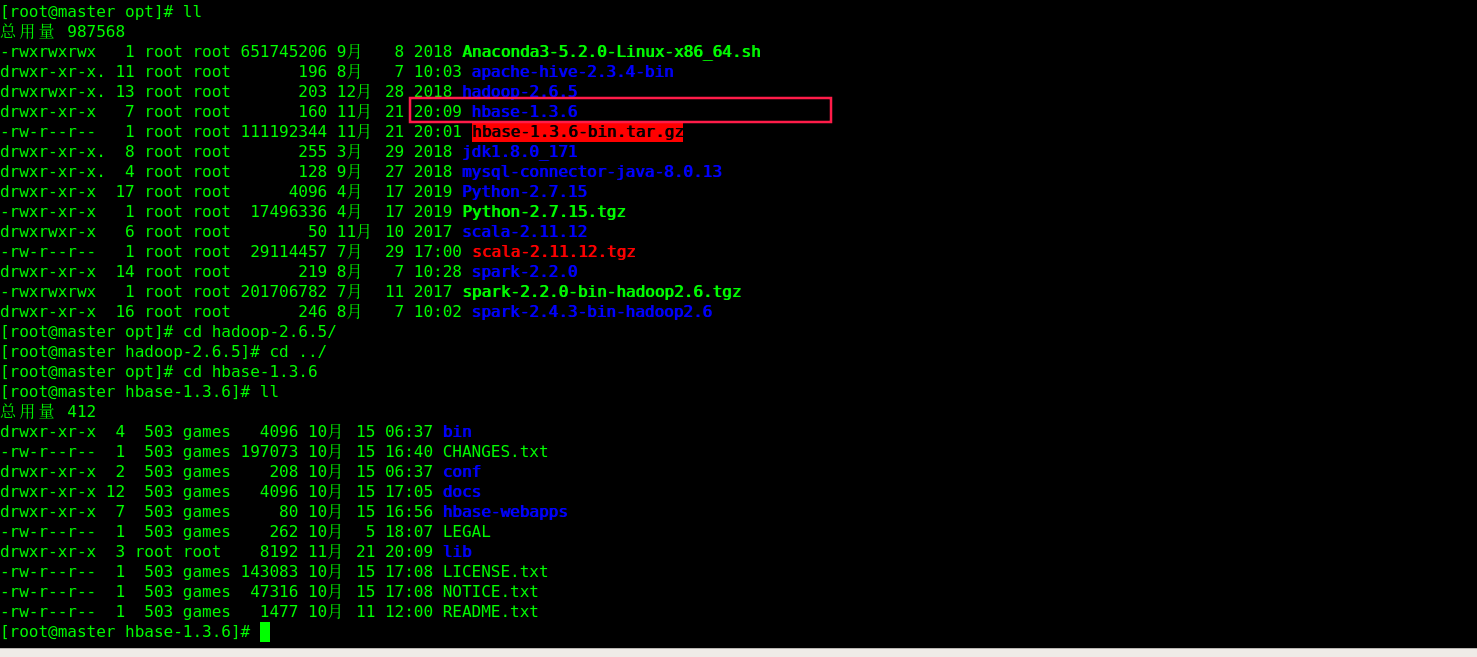

2 Go to the directory that holds the HBase archive and extract it

```shell script
tar zxvf ./hbase-1.3.6-bin.tar.gz
cd hbase-1.3.6/conf
```

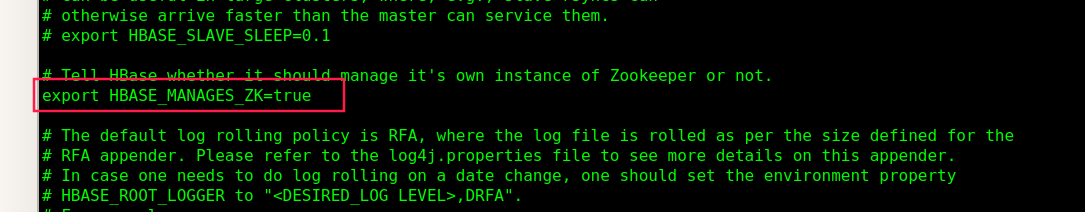

3 Edit hbase-env.sh

vi hbase-env.sh

Set this to true to use the ZooKeeper bundled with HBase, or to false to use a standalone ZooKeeper:

export HBASE_MANAGES_ZK=true

Set JAVA_HOME to the actual JDK path:

export JAVA_HOME=/opt/jdk1.8.0_181/

4 Edit hbase-site.xml

vi hbase-site.xml

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
    <description>hbase.rootdir is the directory shared by the RegionServers, where HBase persists its data. By default it is written under /tmp, so without this setting the data is lost when HBase restarts. It is normally set to an HDFS directory: if the NameNode runs on port 9000 of namenode.example.org, set it to hdfs://namenode.example.org:9000/hbase
    </description>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
    <description>Deployment mode of HBase: false means standalone mode, true means distributed mode (pseudo-distributed or fully distributed).
    </description>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master,slave01,slave02</value>
    <description>Comma-separated list of the hosts that form the ZooKeeper ensemble; here the three cluster nodes.
    </description>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/var/zookeeper</value>
    <description>Directory where ZooKeeper stores its data. If unset it defaults to a location under /tmp, and the data is lost on reboot.
    </description>
  </property>
  <property>
    <name>hbase.master</name>
    <value>hdfs://master:60000</value>
    <description>Host and port of the HBase master</description>
  </property>
</configuration>

5 Configure regionservers

```shell script
vi regionservers

# Original content
localhost

# After the change
master
slave01
slave02
```

6 Copy the HBase directory to every node

```shell script
scp -r hbase-1.3.6 root@slave01:/opt/
scp -r hbase-1.3.6 root@slave02:/opt/
```

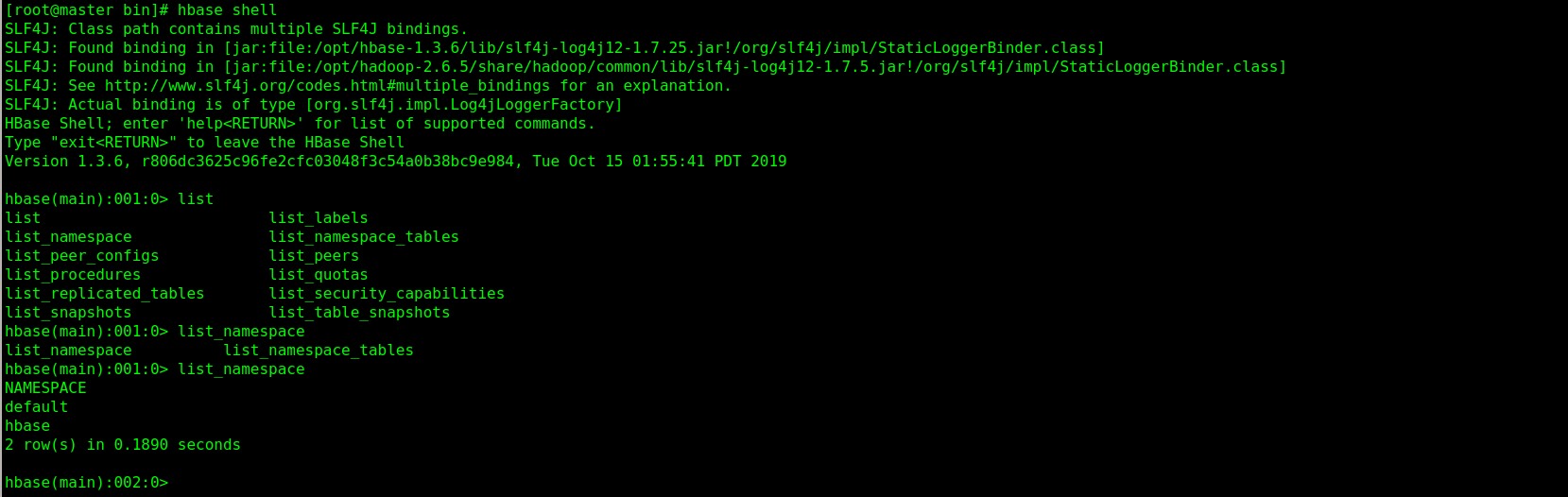

7 Start HBase; make sure HDFS is already running first

```shell script
cd /opt/hbase-1.3.6/bin
./start-hbase.sh
```

8 Result after startup

> In HBase releases after 0.98.x (1.0 and later), the Web UI ports changed from 60010 on the master and 60030 on the RegionServer to 16010 and 16030.

9 Add HBase to the system environment variables for convenience

```shell script
vi /etc/profile

export HBASE_HOME=/opt/hbase-1.3.6
export PATH=$PATH:$HBASE_HOME/bin

source /etc/profile
```

10 Connect to HBase from Python 3

Start the HBase Thrift server first; happybase talks to HBase through it:

```shell script
./hbase-daemon.sh start thrift
```

```python
import happybase

# Open a connection to the Thrift server (9090 is the Thrift default port)
conn = happybase.Connection(
    host="172.16.161.152",
    port=9090,
    timeout=None,
    autoconnect=True,
    table_prefix=None,
    table_prefix_separator=b'_',
    compat='0.98',
    transport='buffered',
    protocol='binary',
)

# List all tables
tables = conn.tables()
print(tables)

# Column families for the new table
families = {
    'user_info': dict(),
    'history': dict()
}

# Create the table
conn.create_table('table_name', families)
tables = conn.tables()
print(tables)

# Delete the table; it must be disabled first, which disable=True does in one call
conn.delete_table('table_name', disable=True)
# Equivalent two-step form:
# conn.disable_table('table_name')
# conn.delete_table('table_name')
tables = conn.tables()
print(tables)
```
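The script above only creates and drops a table. As a follow-up, here is a hedged sketch of writing and reading a row through happybase's `Table.put` and `Table.row`. The column qualifiers `name` and `city` and the helper names are hypothetical, and `InMemoryTable` is only a stand-in that mimics those two methods so the helpers can be tried without a cluster.

```python
def save_user(table, row_key, name, city):
    """Write one row into the user_info column family."""
    table.put(row_key.encode("utf-8"), {
        b"user_info:name": name.encode("utf-8"),
        b"user_info:city": city.encode("utf-8"),
    })


def load_user(table, row_key):
    """Read the row back and decode it into a plain dict of strings."""
    row = table.row(row_key.encode("utf-8"))
    return {col.decode("utf-8"): val.decode("utf-8") for col, val in row.items()}


class InMemoryTable:
    """Hypothetical stand-in for happybase.Table, for trying the helpers offline."""

    def __init__(self):
        self._rows = {}

    def put(self, row, data):
        self._rows.setdefault(row, {}).update(data)

    def row(self, row):
        return dict(self._rows.get(row, {}))


table = InMemoryTable()  # with a live cluster: table = conn.table('table_name')
save_user(table, "user-001", "alice", "Beijing")
print(load_user(table, "user-001"))
```

Keys and values are encoded to bytes explicitly because HBase stores raw bytes and happybase passes them through unchanged.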

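Distributed cluster setup

Hadoop 2.6.5, CentOS 7

- Prepare the environment. When changing hostnames, do not use special characters such as _ or /.

| Hostname | IP | Role |
| --- | --- | --- |
| master | 172.16.161.152 | NameNode |
| slave01 | 172.16.161.154 | DataNode |
| slave02 | 172.16.161.155 | DataNode |

- Set up hostname resolution and turn off the firewall (all machines)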

vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.161.152 master

172.16.161.154 slave01

172.16.161.155 slave02
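Because every later step depends on these three names resolving identically on every node, it can help to verify the file mechanically. A minimal sketch, with hypothetical function names, that parses hosts-file text and reports cluster entries that are missing or point at a different IP:

```python
def parse_hosts(hosts_text):
    """Map every hostname or alias in /etc/hosts-style text to its IP address."""
    mapping = {}
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # keep the first mapping seen
    return mapping


def missing_hosts(hosts_text, expected):
    """Return expected (name, ip) pairs that are absent or mapped differently."""
    mapping = parse_hosts(hosts_text)
    return [(name, ip) for name, ip in expected.items() if mapping.get(name) != ip]


CLUSTER = {
    "master": "172.16.161.152",
    "slave01": "172.16.161.154",
    "slave02": "172.16.161.155",
}

# A deliberately incomplete sample: slave02 is not listed.
sample = """127.0.0.1 localhost localhost.localdomain
172.16.161.152 master
172.16.161.154 slave01
"""
print(missing_hosts(sample, CLUSTER))
```

On a real node, pass `open("/etc/hosts").read()` instead of `sample`; an empty list means all three entries are present.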

Disable SELinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

setenforce 0

Stop iptables

service iptables stop

chkconfig iptables off

Check the firewall on every machine and make sure it is off; leaving it on causes many problems later.

Ubuntu (ubuntu-12.04-desktop-amd64)

Check firewall status: ufw status

Disable the firewall: ufw disable

---------------------------------------------------------------

CentOS 6.0

Check firewall status: service iptables status

Keep the firewall service from starting at boot: chkconfig iptables off

--------------------------------------------------------------

CentOS 7.0 (firewalld is the default firewall; unless you have switched to iptables, use the following commands to check and stop it)

Check firewall status: firewall-cmd --state

Stop the firewall: systemctl stop firewalld.service

Disable firewalld at boot: systemctl disable firewalld.service

- Configure the JDK environment variables (all machines): download the JDK and extract it under /opt/

vi /etc/profile

export JAVA_HOME=/opt/jdk1.8.0_181

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

source /etc/profile

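- Configure passwordless SSH login (all machines); install SSH first if it is missing

The steps below use 172.16.161.152 as the example. Repeat them on every machine. The first login to each host still asks for a password.

1) ssh-keygen

2) Copy the public key to each of the other two VMs

ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.161.154

ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.161.155

- Download hadoop-2.6.5 and extract it under /opt/

/opt/hadoop-2.6.5

- Edit hadoop-env.sh

cd /opt/hadoop-2.6.5/etc/hadoop/

vi hadoop-env.sh

Change: export JAVA_HOME=${JAVA_HOME}

To: export JAVA_HOME=/opt/jdk1.8.0_181 (the JDK directory)

- Edit the slaves file and add the DataNode hostnames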

vi slaves

slave01

slave02

- Edit the masters file and add the NameNode hostname

vi masters

master

- Edit core-site.xml

vim core-site.xml

The file should contain:

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/hadoop-2.6.5/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://172.16.161.152:9000</value>

</property>

</configuration>

- Edit hdfs-site.xml

vi hdfs-site.xml

<configuration>

<property>

<name>dfs.name.dir</name>

<value>file:/opt/hadoop-2.6.5/dfs/name</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:/opt/hadoop-2.6.5/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>
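The cluster files here use the older property names `fs.default.name`, `dfs.name.dir` and `dfs.data.dir`. Hadoop 2.x still accepts them but treats them as deprecated aliases of `fs.defaultFS`, `dfs.namenode.name.dir` and `dfs.datanode.data.dir`, the names used in the single-node section. The sketch below scans a site file for those three old names; the function name is hypothetical and the table covers only these three properties.

```python
import xml.etree.ElementTree as ET

# Old name -> Hadoop 2.x name (only the three properties used in these notes).
RENAMED = {
    "fs.default.name": "fs.defaultFS",
    "dfs.name.dir": "dfs.namenode.name.dir",
    "dfs.data.dir": "dfs.datanode.data.dir",
}


def deprecated_keys(site_xml):
    """Return (old, new) pairs for deprecated property names found in the XML."""
    root = ET.fromstring(site_xml)
    found = []
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        if name in RENAMED:
            found.append((name, RENAMED[name]))
    return found


sample = """<configuration>
  <property><name>dfs.name.dir</name><value>file:/opt/hadoop-2.6.5/dfs/name</value></property>
  <property><name>dfs.data.dir</name><value>file:/opt/hadoop-2.6.5/dfs/data</value></property>
  <property><name>dfs.replication</name><value>2</value></property>
</configuration>"""
for old, new in deprecated_keys(sample):
    print(old, "->", new)
```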

- Edit mapred-site.xml

vi mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

- Edit yarn-site.xml

vi yarn-site.xml

<property>

<name>yarn.resourcemanager.address</name>

<value>master:18040</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:18030</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:18088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:18025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:18141</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

- Copy the configured Hadoop installation to the other nodes

scp -rp /opt/ root@slave01:/

scp -rp /opt/ root@slave02:/

- Initialize the Hadoop cluster (only the NameNode host, master, needs to be formatted)

cd /opt/hadoop-2.6.5/bin

./hadoop namenode -format

- Start the Hadoop cluster

cd /opt/hadoop-2.6.5/sbin

./start-all.sh

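15. On a manually built cluster, memory resources must be configured before running large numbers of MapReduce jobs; otherwise containers are killed with: is running beyond virtual memory limits. Current usage: 142.0 MB of 1 GB physical memory used; 2.2 GB of 2.1 GB virtual memory used. Killing container.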

yarn-site.xml

<!-- Memory available to YARN on each NodeManager: 6 GB -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>6144</value>

</property>

<!-- Minimum allocation per container: 1 GB -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<!-- Virtual memory limit = 4 GB * 0.7 = 2.8 GB -->

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>0.7</value>

</property>

mapred-site.xml

<!-- Physical RAM allocated to each map task: 4 GB -->

<property>

<name>mapreduce.map.memory.mb</name>

<value>4096</value>

</property>

<!-- Physical RAM allocated to each reduce task: 4 GB -->

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>4096</value>

</property>

<!-- The JVM heap size must be lower than the map and reduce memory defined above -->

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx3072m</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx3072m</value>

</property>
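The numbers in the error message and in the comments above follow from one multiplication: YARN caps a container's virtual memory at its physical allocation times `yarn.nodemanager.vmem-pmem-ratio`. The sketch below reproduces both figures. It assumes the failing job ran with YARN's default ratio of 2.1, which matches the "2.1 GB" in the error text; the function name is hypothetical.

```python
def vmem_limit_mb(container_mb, vmem_pmem_ratio):
    """Virtual memory ceiling YARN enforces for a container of the given size."""
    return container_mb * vmem_pmem_ratio


# The failing case from the error message: a 1 GB container with ratio 2.1.
default_limit_gb = round(vmem_limit_mb(1024, 2.1) / 1024, 1)
# The values configured above: 4 GB map/reduce containers with ratio 0.7.
tuned_limit_gb = round(vmem_limit_mb(4096, 0.7) / 1024, 1)

print(default_limit_gb)  # → 2.1
print(tuned_limit_gb)    # → 2.8
```

Note that with a ratio below 1 the virtual memory ceiling (2.8 GB) ends up lower than the 4 GB physical allocation, so it is worth confirming on a real job that containers are no longer killed.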

ERROR

- put: Call From master/172.16.161.152 to master:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

Fix: set fs.defaultFS in core-site.xml to the NameNode address.

vi core-site.xml

<property>

<name>fs.defaultFS</name>

<value>hdfs://172.16.161.152:9000</value>

</property>

- java.net.NoRouteToHostException: No route to host

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

Make sure every machine can reach the others by hostname.

Check 1: /etc/hosts on every machine

Check 2: the slaves and masters files
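Both errors in this section come down to whether a TCP connection to a given host and port can be opened. A small probe like the one below, run from each node, narrows the problem down quickly. It is a sketch using only the standard library; `can_connect` is a hypothetical helper, and the demonstration uses a throwaway listener on localhost rather than the cluster.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused connection, no route to host, DNS failure, timeout
        return False


# Offline demonstration against a local listener.
server = socket.socket()
server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
server.listen(1)
port = server.getsockname()[1]
reachable_while_listening = can_connect("127.0.0.1", port)
server.close()
reachable_after_close = can_connect("127.0.0.1", port)
print(reachable_while_listening, reachable_after_close)
```

On the cluster, `can_connect("master", 9000)` run on slave01 and slave02 exercises hostname resolution, routing, the firewall and the NameNode port in one call.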

groupadd hadoop

useradd -g hadoop hadoop

vi /etc/sudoers

hadoop ALL=(ALL:ALL) ALL